Normalizing and appropriating new scientific findings

This is a long but organized dump of some thoughts on a particular type of distortion that arises from an attitude of conservatism or traditionalism. It is part of a longer-term attempt to understand controversy and novelty, with the practical goal of helping scientists themselves to cut through bullshit and learn how to interpret new findings and reactions to new findings. The topics here in rough order are:

- anachronism and the Sally-Ann test

- the stages of truth, ending with normalization and appropriation

- back-projection as a means of appropriation

- ret-conning as a means of appropriation

- some examples

- the missing pieces theory in historiography

- sub- and neo-functionalization in the gene duplication literature

- “Fisher’s” geometric model

- Haldane 1935 and male mutation bias

- responses to Monroe, et al. (2022)

- ret-conning the Synthesis and the new-mutations view

- “we have long known” claims

- Platonic realism in relation to back-projection

- a gravity model for misattribution

- pushing back against appropriation

Sally-Ann and the stages of truth

When a new finding appears, this changes the world of knowledge and our perspective on the state of knowledge. After a new result has appeared, reconstructing what the world looked like before the new result can be difficult.

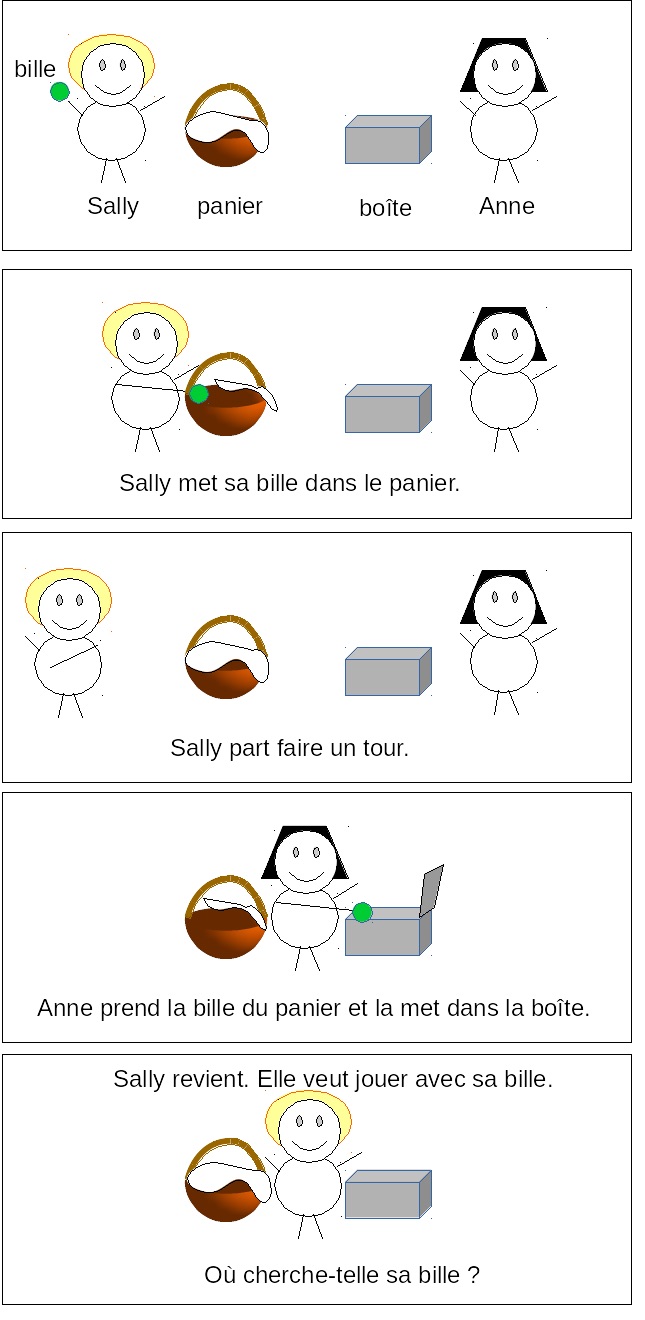

Indeed, for young children, understanding what the world looks like from the perspective of someone with incomplete knowledge is literally impossible. This issue is probed by the “Sally-Ann” developmental test, in which a child is told a story illustrated with pictures and then asked a question. The story is that Sally puts a toy in her basket and leaves; before she returns, Ann moves the toy to her box. The question is: where will Sally look for the toy, in the basket or in the box? A developmentally typical adult will say that Sally will look in the basket, where she put it. Sally’s state of knowledge is different from ours: she doesn’t know that her toy was moved to the box.

But children under 4 typically say that Sally will look in the box. They have not yet acquired the mental skill of modeling the perspective of another person with different information. In their only model of the world (the world as seen from their own perspective), the toy is in the box, so they assume that this is also Sally’s perspective, and on that basis, they predict that Sally will look in the box.

The most egregious kinds of scientific anachronism have the same flavor as this childish error, e.g., describing Darwin’s theory as one of random mutation and selection. It is notoriously difficult for us to forget genetics and comprehend pre-Mendelian thinking on heredity and evolution. For this reason, one often hears the notion that Mendelism supplies the “missing pieces” to Darwin’s theory of evolution, as if Darwin had articulated a theory with a missing component in the precise shape of Mendelian genetics, yet did not foresee Mendelian genetics.

Historian Peter Bowler loves to mock the missing-pieces story. Darwin did not, in fact, propose a theory with a hole in it for Mendelism: he proposed a non-Mendelian theory based on the blending of environmental fluctuations under the struggle for life, which Johannsen then refuted experimentally. Historian Jean Gayon wrote an entire book about the “crisis” precipitated by Darwin’s errant views of heredity. Decades passed before Darwin’s followers threw their support behind a superficially similar theory combining a neo-Darwinian front end with a Mendelian back end. Then they shut their eyes tightly, made a wish, and the original fluctuation-struggle-blending theory mysteriously vanished from the pages of the Origin of Species. They can’t see it. They can’t see anything non-Mendelian even if you hold the OOS right up in front of their faces and point to the very first thing Darwin says in Ch. 1. All they see is a missing piece. This act of mass self-hypnosis has endured for a century.

Normalization: the stages-of-truth meme

Anachronistic attempts to make sense of the past fit a pattern of normalization suggested by the classic “stages of truth” meme (see the QuoteInvestigator piece), in which a bold new idea is first dismissed as absurd, then challenged as unsupported, then normalized. Depictions of normalization emphasize either that (1) the new truth is declared trivial or self-evident (e.g., Schopenhauer’s “trivial” or “selbstverständlich”), or (2) its origin is pushed backwards in time and credited to predecessors, e.g., Agassiz says the final stage is “everybody knew it before” and Sims says:

For it is ever so with any great truth. It must first be opposed, then ridiculed, after a while accepted, and then comes the time to prove that it is not new, and that the credit of it belongs to some one else.

This phenomenon is something that deserves a name and some careful research (such research may exist already, but I have not found it yet in the scholarly literature). The general pattern could be called normalization (making something normal, a norm) or appropriation (declaring ownership of new results on behalf of tradition). Normalization or appropriation is a general pattern or end-point for which there are multiple rhetorical strategies. I use the term “back-projection” when contemporary ideas are projected naively onto progenitors, and I sometimes use “ret-conning” when there is a more elaborate kind of story-telling that anchors new findings in tradition and links them to illustrious ancestors. Recognizing these tactics (and the overall pattern) can help us to cut through the bullshit and assess more objectively the relationship of new findings or current thinking to the past.

Back-projection examples (DDC model and Monroe, et al 2022)

The contemporary literature on gene duplication features a common 3-part formula with consistent language for what might happen when a genome contains 2 copies of a gene: neo-functionalization, sub-functionalization or loss (or pseudogenization).

This 3-part formula began to appear after the sub-functionalization model was articulated in independent papers by Force, et al. (1999) and Stoltzfus (1999). Each paper presented a theory of duplicate gene establishment via subfunctionalization, and then used a population-genetic model to demonstrate the soundness of the theory. In this model, each copy of a gene loses a sub-function, such as expression in a particular tissue, but the loss is genetically complemented by the other copy, so that the two genes together are sufficient to do what one gene did previously. Force, et al. called their model the duplication-degeneration-complementation (DDC) model; the model of Stoltzfus (1999) was presented as a case of constructive neutral evolution.
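To make the complementation logic concrete, here is a deliberately stripped-down Monte Carlo sketch in Python. It is not the population-genetic model of Force, et al. (1999) or of Stoltzfus (1999). It assumes that any loss complemented by the other copy simply drifts to fixation, that any loss breaking complementation is purged, and that mutations hit the two copies equally often; the function names and the parameters z (number of independent subfunctions) and q_null (fraction of losses that are nulls) are hypothetical.

```python
import random


def ddc_outcome(z, q_null, rng):
    """Follow one hypothetical duplicate pair until its fate is settled.

    Returns "sub" if each copy ends up carrying a subfunction the other
    lacks (both copies essential), or "loss" if one copy degenerates.
    """
    copies = [set(range(z)), set(range(z))]  # both copies start intact
    while True:
        a, b = copies
        if not a or not b:
            return "loss"  # one copy has lost every subfunction
        if (a - b) and (b - a):
            return "sub"  # mutual complementation: neither copy can be lost
        i = rng.randrange(2)  # which copy the mutation hits
        if rng.random() < q_null:
            proposal = set()  # null mutation removes all subfunctions
        else:
            proposal = copies[i] - {rng.randrange(z)}  # lose one subfunction
        # The loss can fix only if the pair still covers all z subfunctions.
        if len(proposal | copies[1 - i]) == z:
            copies[i] = proposal


def prob_subfunctionalization(z, q_null=0.0, trials=2000, seed=1):
    """Fraction of simulated pairs preserved by subfunctionalization."""
    rng = random.Random(seed)
    outcomes = [ddc_outcome(z, q_null, rng) for _ in range(trials)]
    return outcomes.count("sub") / trials


for z in (1, 2, 4):
    print(z, prob_subfunctionalization(z))
```

With a single subfunction, preservation by subfunctionalization is impossible, so the estimate is zero. With two subfunctions and no null mutations, a short case analysis gives exactly 1/2, which the simulation should approximate.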

The appearance of this new and somewhat subversive theory— calling on neutral evolution to account for a pattern of apparent functional specialization— sparked a renewed interest in duplicate gene evolution that has been surprisingly durable, continuing to the present day. The article by Force, et al has been cited over 2000 times. That is a huge impact!

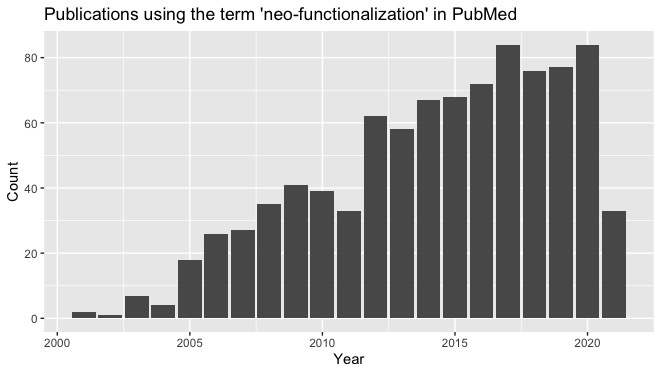

As noted, the emergence of this theory induced the use of a now-familiar 3-part formula. Along with it came a shift in how existing concepts were described, using the neat binary contrast of sub- versus neo-. For instance, “neo-functionalization” refers to the classic idea that a duplicate gene gains a new function, yet the term itself is not traditional: it spread beginning with its use by Force, et al. (1999), as shown in this figure.

Then the back-projection began. Even though this 3-part formula emerged in 1999, references in the literature (e.g., here) began to attribute it to an earlier piece by Austin Hughes that does not propose a model for the preservation or establishment of duplicate copies by subfunctionalization. Instead, Hughes (1994) argued that new functions often emerge within one gene (“gene sharing”) before gene duplication proceeds, i.e., Hughes proposed dual-functionality as an intermediate stage in the process of neo-functionalization (see the discussion on Sandwalk):

A model for the evolution of new proteins is proposed under which a period of gene sharing ordinarily precedes the evolution of functionally distinct proteins. Gene duplication then allows each daughter gene to specialize for one of the functions of the ancestral gene.

Hughes (1994)

Over time, the back-projection became even more extreme: some sources began to attribute aspects of this scheme to Ohno (1970) (e.g., here), as when Hahn (2009) writes:

In his highly prescient book, Susumu Ohno recognized that duplicate genes are fixed and maintained within a population with 3 distinct outcomes: neofunctionalization, subfunctionalization, and conservation of function.

What, precisely, is Hahn saying here? He does not directly attribute the DDC model to Ohno. He seems to refer primarily to outcomes rather than to processes, leaving room for interpretation. Perhaps there is some subtle way in which it is legitimate to apply the word “subfunctionalization” anachronistically, but it isn’t clear what exactly Ohno said that justifies this statement. Of course, Ohno did not use the term “neo-functionalization” either, but there is no anachronism in applying it, because the term was invented specifically as the label for the old and familiar idea of gaining a new function. Again, Hahn does not say explicitly and clearly that the subfunctionalization model comes from Ohno, but this is what the reader will assume.

And this is where the ingenuity of back-projection goes wrong: the more clever you are in weaving a thread backwards from the present into the past, spinning a story that connects current thinking to older sources (older sources that actually used different language and explored different ideas), the more likely you are to mislead people.

Obviously any new theory or finding will have some aspects that are not new. A common strategy of appropriation is to point to familiar parts of a new finding, and present those as the basis to claim that the finding is not new. One version of this tactic is to focus on a phenomenon or process that features either as a cause or an effect in a new theory, and then claim that, because this part was recognized earlier, the theory is not new. For instance, Niche Construction Theory (NCT) is about the reciprocal ways in which organisms both adapt to, and modify, their environment. However, naturalists have recognized for centuries that organisms modify their environment, e.g., beavers build dams and earthworms aerate and condition the soil. Therefore, strategies of appropriation by traditionalists (e.g., Wray, et al; see Stoltzfus, 2017) focus on the way that authors such as Darwin noted how earthworms modify their environment, claiming that this undermines the novelty of NCT.

If this kind of argument were valid, it would mean that we have no need for genuine causal theories in science, e.g., theories that induce sophisticated mathematical relations between measurable quantities, because it equates the recognition of an effect with a theory for that effect. In the Origin of Species, Darwin explicitly and repeatedly invoked 3 main causes of evolutionary modification: natural selection, use and disuse, and direct effects of environment. He did not list niche construction. Saying that niche construction theory is not novel on the grounds that the phenomenology it was designed to explain was noticed earlier is like saying that Newton’s theory of gravity was not novel because humans, going back to ancient times, already knew that heavy things fall [7].

A variety of anachronisms, misapprehensions, and other pathologies of normalization were evident in responses to the recent report by Monroe, et al. (2022) of a genome-wide anti-correlation in Arabidopsis between mutation rate and functional density [3]. One commentary was entitled “Who ever thought genetic mutations were random?”, which is outright scientific gaslighting. Another commentary stated that “Scientists have been demonstrating that mutations don’t occur randomly for nearly a century,” citing a 1935 paper from Haldane that does not explicitly invoke either random or non-random mutation, and does not report any systematic asymmetry or bias in mutation rates.

I was so mystified by this citation that I read Haldane’s paper line by line about 4 times and, finding nothing, used an online service (scite) to examine the context of about 70 citations to Haldane’s 1935 paper. I found that the paper was mainly cited for the reasons one would expect (sex-linked diseases, mutation-selection balance) until about 2 decades ago, when male mutation bias became a hot topic; scientists then began to cite Haldane (1935) as though it were a source of the idea (which actually appears in Haldane 1947; for details, see note 8). In fact, Haldane (1935) does not propose male mutation bias. The closest he gets to this possibility is a mutation-selection balance model for sex-linked diseases with separate parameters for male and female mutation rates, though ultimately his actual estimate is a single rate inferred from the frequency of haemophilic males (“x” in his notation). That is, male mutation bias was back-projected to Haldane in expert sources (an example is shown in the figure below), and then this pattern was twisted into an even more bizarre claim in the newsy piece about Monroe, et al. Whereas the authors who originated and propagated this myth probably never stopped to ponder what they were doing, I spent multiple hours checking my own work, illustrating Brandolini’s law: refuting bullshit takes an order of magnitude more effort than producing it.
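A textbook-style sketch of that kind of model shows why separate parameters for the two sexes do not by themselves amount to a claim of bias. This is a simplified X-linked recessive model of my own, not a transcription of Haldane’s equations; apart from the observable x, the names and numbers (mu_f, mu_m, s = 0.75) are hypothetical. At equilibrium the frequency of affected males is approximately (2·mu_f + mu_m)/s, so a single observed x constrains only that combination of rates.

```python
def x_linked_balance(mu_f, mu_m, s, generations=1000):
    """Iterate a simple X-linked recessive model to equilibrium.

    mu_f, mu_m -- mutation rates in the female and male germlines
    s          -- selection coefficient against affected (hemizygous) males
    Returns the frequency of affected males (the observable "x").
    Assumes affected females are negligibly rare and carriers are unselected.
    """
    q_f = q_m = 0.0  # allele frequency on female and male X chromosomes
    for _ in range(generations):
        # Fathers transmit X after selection on affected males, then mutation.
        q_sperm = q_m * (1 - s) / (1 - s * q_m)
        q_sperm += mu_m * (1 - q_sperm)
        # Mothers transmit X without selection, then mutation.
        q_egg = q_f + mu_f * (1 - q_f)
        # Sons get their X from mothers; daughters get one X from each parent.
        q_m, q_f = q_egg, 0.5 * (q_egg + q_sperm)
    return q_m


def x_approx(mu_f, mu_m, s):
    """Linearized equilibrium: x ~ (2 * mu_f + mu_m) / s."""
    return (2 * mu_f + mu_m) / s


for mu_f, mu_m in [(2e-5, 2e-5), (1e-5, 4e-5)]:
    print(mu_f, mu_m, x_linked_balance(mu_f, mu_m, s=0.75), x_approx(mu_f, mu_m, 0.75))
```

Equal rates and a fourfold male excess with the same value of 2·mu_f + mu_m give practically the same x, so turning x into a single rate requires an extra assumption, such as equal rates in the two sexes.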

An important lesson to draw from such examples is that when new results are injected into evolutionary discourse, they provoke new statements, even if those new statements take the form of a novel defense of orthodoxy, e.g., outrageous takes like “Who ever thought genetic mutations were random?” or “Scientists have been demonstrating that mutations don’t occur randomly for nearly a century.” That is, the publication of Monroe, et al. caused these novel sentences to come into existence, as a form of normalization.

More generally, new work may induce increased attention to older work, rightly or wrongly. The extreme case would be a jump in attention to Mendel’s work, not when it was published in 1865, but when it was “rediscovered” in 1900 [6]. The appearance of Monroe, et al. (2022) stimulated a jump in attention to earlier work from Chuang and Li (2004) and Martincorena, et al (2012). Renewed attention to relevant prior work is salutary in the sense that (1) the later work increases the posterior probability of the earlier claims, and (2) this re-evaluation rightfully draws our attention. However, this is not salutary if (1) the earlier studies failed to have an impact for any reason, but particularly because they were not as convincing, and (2) their existence is now being used retroactively to make a case against the novelty of subsequent work. The work of Martincorena, et al. stimulated a backlash at the time; Martincorena wrote a rebuttal but never published it (it’s still on biorxiv), and then got out of evolutionary biology, escaping our toxic world for the kinder gentler field of cancer research. But now his work (and the work of Chuang and Li) is put forth as the basis of “we have long known” claims attempting to undermine the novelty of Monroe, et al. (e.g., this and other rhetorical strategies are used to undermine the novelty of Monroe, et al in this video from a youtube science explainer).

“Fisher’s” geometric model

As a more extended example of back-projection, consider the case of “Fisher’s geometric model.”

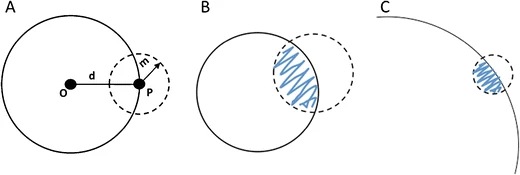

Given a range of effect-sizes of heritable differences from the smallest to the largest, i.e., effects that might be incorporated in evolutionary adaptation, which size is most likely to be beneficial? Fisher (1930) answered this question with his famous geometric model. The chance of a beneficial effect is a monotonically decreasing function of effect-size, so that the smallest possible effects have the greatest chance of being beneficial. Fisher concluded from this that the smallest changes are the most likely in evolution, i.e., adaptation will occur gradually, by infinitesimals. To put this in more formal terms, for any size of change d, Fisher’s model allows us to compute a chance of being beneficial b = Pr(s > 0), and he showed that b approaches a maximum, b → 0.5, as d → 0.
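A small Monte Carlo sketch in Python can make Fisher’s monotonic relationship concrete. The set-up is an assumption for illustration: the phenotype sits at distance z from an optimum at the origin in n dimensions, and a change of size d points in a uniformly random direction; it is beneficial if it lands closer to the optimum. The function names and default values are hypothetical, and the closed-form helper is the familiar large-n normal approximation, b ≈ 1 − Φ(d√n/(2z)).

```python
import math
import random


def prob_beneficial(d, n=10, z=1.0, trials=20000, seed=1):
    """Monte Carlo estimate of b = Pr(s > 0) for a change of size d."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        # Uniform random direction: normalize a vector of standard normals.
        u = [rng.gauss(0.0, 1.0) for _ in range(n)]
        norm = math.sqrt(sum(x * x for x in u))
        new = [d * x / norm for x in u]
        new[0] += z  # the phenotype starts at (z, 0, ..., 0)
        if sum(x * x for x in new) < z * z:
            hits += 1  # landed closer to the optimum
    return hits / trials


def prob_beneficial_approx(d, n=10, z=1.0):
    """Large-n normal approximation: b ~ 1 - Phi(d * sqrt(n) / (2 * z))."""
    x = d * math.sqrt(n) / (2.0 * z)
    return 0.5 * math.erfc(x / math.sqrt(2.0))


for d in (0.01, 0.1, 0.5, 1.0, 2.5):
    print(d, prob_beneficial(d), round(prob_beneficial_approx(d), 3))
```

Both estimates rise toward 0.5 as d shrinks. The simulation gives exactly zero once d ≥ 2z, since no change that large can land closer to the optimum; the normal approximation only approaches zero there.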

Kimura (1983) revisited this argument 50 years later, but from the neo-mutationist perspective that emerged among molecular evolutionists in the 1960s and gave rise to the origin-fixation formalism (McCandlish and Stoltzfus, 2014). That is, Kimura treated the inputs as new mutations subject to fixation, rather than as a shift defined phenotypically, or defined by the expected effect of allelic substitution from standing variation. Each new mutation has a probability of fixation p that depends not merely on whether the effect is beneficial, but on how strongly beneficial it is. Mutations with bigger effects are less likely to be beneficial, but among the beneficial mutations, the ones with bigger effects have higher selection coefficients, and thus are more likely to reach fixation. Meanwhile, as d → 0, the chance of fixation simply approaches the neutral limit (1/(2N) for a diploid population of size N), i.e., the mutations with the tiniest effects behave as neutral alleles whether they are beneficial or not.

So, instead of Fisher’s argument with one monotonic relationship dictating that the chances of evolution depend on b, which decreases with size, we now have a second monotonic relationship in which the chances of evolution depend on p that (conditional on being beneficial) increases with size. The combination of the two opposing effects results in an intermediate optimum.
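The two opposing relationships can be combined in a rough Monte Carlo sketch: sample mutations of size d as in Fisher’s geometry, convert each into a selection coefficient, and average the fixation probability. The assumptions are mine, not Kimura’s exact set-up: Gaussian fitness w = exp(−distance²/2) around the optimum, n = 10 traits, a diploid population of size N = 1000, and one common form of Kimura’s diffusion approximation for a new mutant. The function names are hypothetical.

```python
import math
import random


def fixation_prob(s, N):
    """Diffusion approximation for a new mutant at frequency 1/(2N)."""
    if abs(s) < 1e-12:
        return 1.0 / (2 * N)  # neutral limit
    if -4 * N * s > 700:
        return 0.0  # strongly deleterious: effectively zero, avoids overflow
    # (1 - exp(-2s)) / (1 - exp(-4Ns)), written with expm1 for accuracy
    return math.expm1(-2 * s) / math.expm1(-4 * N * s)


def mean_fixation_prob(d, n=10, z=1.0, N=1000, trials=20000, seed=1):
    """Average fixation probability of new mutations of size d."""
    rng = random.Random(seed)
    w_old = math.exp(-z * z / 2)  # fitness of the starting phenotype
    total = 0.0
    for _ in range(trials):
        u = [rng.gauss(0.0, 1.0) for _ in range(n)]
        norm = math.sqrt(sum(x * x for x in u))
        new = [d * x / norm for x in u]
        new[0] += z
        s = math.exp(-sum(x * x for x in new) / 2) / w_old - 1
        total += fixation_prob(s, N)
    return total / trials


for d in (1e-6, 0.01, 0.3, 1.5):
    # ratio > 1 means more likely to fix than a neutral allele
    print(d, mean_fixation_prob(d) * 2 * 1000)
```

Under these assumptions the ratio to the neutral value sits near 1 for the tiniest changes, climbs well above 1 at intermediate sizes, and collapses for large changes, which is the intermediate optimum described above.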

Thus Kimura transformed and recontextualized Fisher’s geometric argument in a way that changes the conclusion and undermines Fisher’s original intent, which was to support infinitesimalism. This is because Kimura’s conception of evolutionary genetics was different from Fisher’s.

The radical nature of Kimura’s move is not apparent in the literature of theoretical evolutionary genetics, where “Fisher’s model” often refers to Kimura’s model (e.g., Orr 2005a, Matuszewski, et al. 2014, Blanquart, et al. 2014). Some authors have been explicit in back-projecting Kimura’s mutationist thinking onto Fisher. For example, to explain why Fisher came to a different conclusion, Orr (2005a) suggests that Fisher made a mistake by forgetting to include the probability of fixation:

“Fisher erred here and his conclusion (although not his calculation) was flawed. Unfortunately, his error was only detected half a century later, by Motoo Kimura”

Orr (2005b) states that “an adaptive substitution in Fisher’s model (as in reality) involves a 2-step process.”

But Fisher himself did not specify a 2-step process as the context for his geometric argument: he did not provide an explicit population-genetic context at all. However, we have no reason to imagine that Fisher was secretly a mutationist. His view of evolution as a deterministic process of selection on available variation is well known, i.e., the missing pop-gen context for Fisher’s argument would look something like this: Evolution is the process by which selection leverages available variation to respond to a change in conditions. At the start of an episode of evolution, the frequencies of alleles in the gene pool reflect historical selection under the previously prevailing environment. When the environment changes, selection starts to shift the frequencies to a new multi-locus optimum: most of them will simply shift up or down partially; any unconditionally deleterious alleles will fall to their deterministic mutation-selection balance frequencies; any unconditionally beneficial ones will go to fixation deterministically. The smallest allelic effects are the most likely to be beneficial, thus they are the most likely to contribute to adaptation.

The fixation of new mutations is not part of this process, and that, surely, is why the probability of fixation plays no part in Fisher’s original calculation. Instead, all one needs to know is the chance of being beneficial as a function of effect-size. Fisher’s argument is complete and free of errors, given the supposition that evolution can be adequately understood as a deterministic process of shifting frequencies of available variation in the gene pool.
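For contrast, the deterministic picture sketched above can be written as a one-locus recursion. This is a minimal haploid sketch, assuming no drift, no linkage, and a constant selection coefficient; it is not Fisher’s own formulation, and the function name and parameters are hypothetical.

```python
def deterministic_shift(p0, s, mu=0.0, generations=1000):
    """Deterministic haploid selection on an allele already present at p0.

    s  -- selection coefficient of the allele (fitness 1 + s versus 1)
    mu -- recurrent mutation rate toward the allele (default: none)
    There is no drift and hence no probability of fixation: the frequency
    simply follows the recursion from its standing value.
    """
    p = p0
    for _ in range(generations):
        p = p * (1 + s) / (1 + s * p)  # selection
        p += mu * (1 - p)              # recurrent mutation
    return p


print(deterministic_shift(0.2, 0.01, generations=5000))             # beneficial
print(deterministic_shift(0.2, -0.01, mu=1e-5, generations=20000))  # deleterious
```

Nothing in the recursion corresponds to a probability of fixation: a beneficial allele rises toward 1 whatever the magnitude of s, which only sets the speed, while a deleterious allele falls to a mutation-selection balance near mu/|s| (about 0.001 here).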

I recently noticed that Matt Rockman’s (2012) seminal reflection on the limits of the QTN program presents a nearly identical argument in his supplementary notes (i.e., 5 years before the longer version I put in the supplement to Stoltzfus 2017):

3. Note that while Fisher was concerned with the size distribution of changes that improve the conformity of organism and environment (i.e., adaptation), Kimura (1983, section 7.1) was discussing the effect size distribution of adaptive substitutions, i.e., his is a theory of molecular evolution. Though many now describe Kimura’s work as correcting Fisher’s mistake, it is not clear that there is a mistake: Fisher was concerned not with fixation but with adaptation. Kimura for one seems not to have thought that he was correcting an error made by Fisher (Kimura 1983, p. 150-151). Though the distributions derived by Fisher and Kimura are both relevant to adaptation, Fisher’s model is compatible with adaptation via allele frequency shifts in standing variation. In Fisher’s words, “without the occurrence of further mutations all ordinary species must already possess within themselves the potentialities of the most varied evolutionary modifications. It has often been remarked, and truly, that without mutation evolutionary progress, whatever direction it may take, will ultimately come to a standstill for lack of further possible improvements. It has not so often been realized how very far most existing species must be from such a state of stagnation” (Fisher 1930, p. 96).

Relative to the case above regarding gene duplications, this case of back-projecting Kimura’s view to Fisher results in a more pernicious mangling of history: it attributes to Fisher a model based on a mutationist mode of evolution that was not formalized until 1969, after Fisher’s death in 1962, and that contradicts Fisher’s most basic beliefs about how evolution works (along with the clear intent of the Modern Synthesis architects to exclude mutationist thinking as an apostasy).

Synthesis apologetics

But these examples are mild compared to the ret-conning that has emerged in debates over the “Modern Synthesis.” In serial fiction, ret-conning means re-telling an old story to ensure retroactive continuity with new developments that the writers added to the storyline in a subsequent episode, e.g., when a character that died previously is brought back to life. The difference between a retcon and simple back-projection is perhaps a matter of degree. The retcon is a much more conscious effort to re-tell the past in order to make sense of the present. The “Synthesis” story is very deliberately ret-conned to appropriate contemporary results. In a different world, the defenders of tradition might have declared that the definitive statement is in that 1970s textbook by Dobzhansky, et al.; they might have stopped writing defenses and just posted a sign saying “See Dobzhansky, et al. for how the Synthesis answers evolutionary questions.” But instead, defenders keep writing new pieces that expand and reinterpret the Synthesis story to maintain an illusion of constancy.

Futuyma is the master of the Synthesis retcon. He has a craftsman’s respect for the older storylines, because he helped write them, so his retcons are subtle and sometimes even artful. We can appreciate the artistry with which he has subtly pulled back from the shifting gene frequencies theory and the grand claims he made originally in 1988 on behalf of the Synthesis. In the original Synthesis storyline, the MS restored neo-Darwinism, crushed all rivals (mutationism, saltationism, orthogenesis, etc.), and provided a common basis for anyone in the life sciences or paleontology to think about evolution.

By contrast, the retcons from the newer traditionalists are full of bold anachronisms. Svensson (2018) mangles the Synthesis timeline by calling on Lewontin’s (1985) advocacy of reciprocal causation to appropriate niche construction, as if the year 1985 were not several decades after the architects of the Modern Synthesis declared victory at the 1959 Darwin centennial. Lewontin (1985) himself says that reciprocal causation was not part of the Darwinian received view in 1985. Welch (2017), dismayed by incessant calls for reform in evolutionary biology, suggests that this reflects intrinsic features of the problem-space: naive complaints are inevitable, he argues, because no single theory can cover such a large field with diverse phenomenology subject to contingency. That is, Welch is boldly erasing the original Synthesis story in which Mayr, et al. explicitly claimed to have unified all of biology (not just evolution, but biology!) with a single theory. Whereas the “contingency” theme emerged after the great Synthesis unified biology, Welch treats this as a timeless intrinsic feature that makes any simple unification impossible (see Platonic realism, below).

Svensson (e.g., here or here) has repeatedly turned history on its head by suggesting that evolution by new mutations is the classical view of the Modern Synthesis and that the perspective of evolutionary quantitative genetics (EQG), i.e., adaptation by polygenic shifting of many small-effect alleles, has been marginalized until recently. To anchor this bold anachronism in published sources, he calls on a marginalization trope from the recent literature on selective sweeps, in which some practitioners sympathetic to EQG looked back (on the scale of 10 or 20 years) to complain that the EQG view was neglected and that the view of hard sweeps from a rare distinctive mutation was the classic view (some of these quotations appear in “The shift to mutationism is documented in our language”).

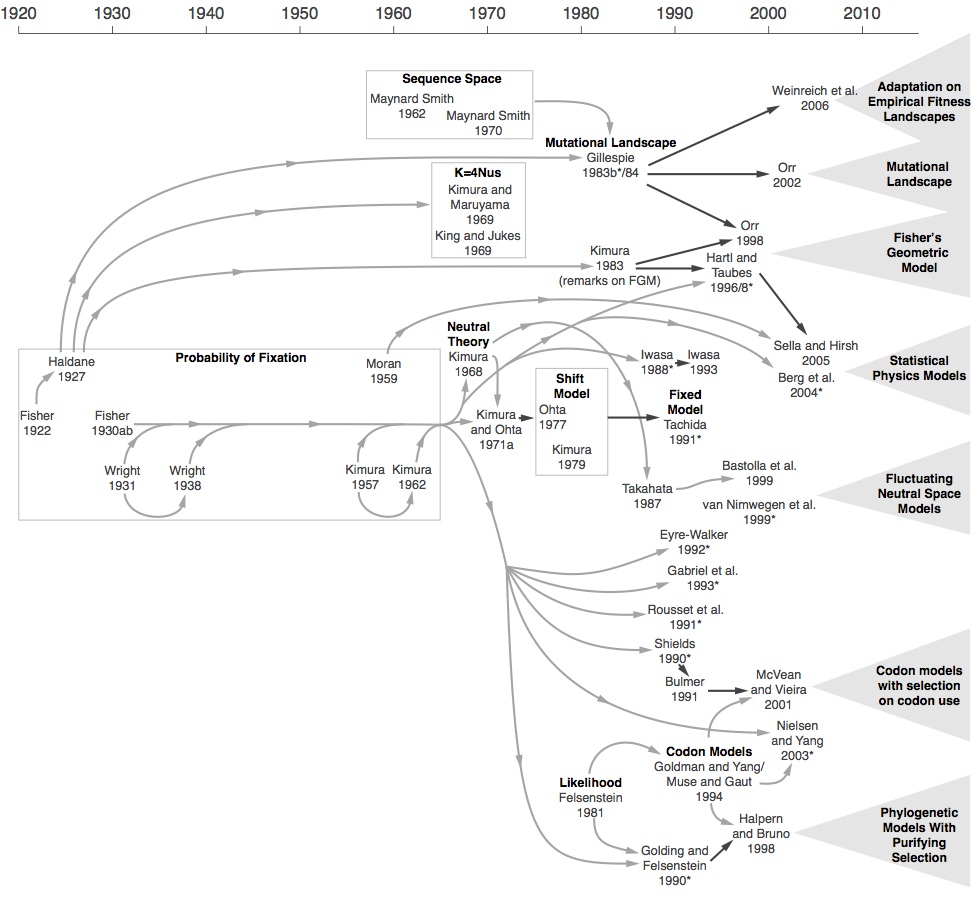

Indeed, hard sweeps are easier to model and that is presumably why they came first, going way back to Maynard Smith and Haigh (1974). And the mini-renaissance of work on the genetics of adaptation from Orr and others beginning in the latter 1990s— work that received a lot of attention— was based on new mutations. But that’s a very shallow way of defining what is “classic” or “traditional.” The mini-renaissance happened (after decades of inactivity) precisely because theoreticians were suddenly exploring the lucky mutant view they had been ignoring or rejecting (Orr says explicitly that the received Fisherian view stifled research by making it seem like the problem of adaptation was solved). The origin-fixation formalism only emerged in 1969, and for decades, this mutation-limited view was associated with neutrality and molecular evolution (see the figure below from McCandlish and Stoltzfus, 2014).

Rockman (2012) again gets this right, depicting the traditional view (from Fisher to Lewontin and onward) as a change in allele frequencies featuring polygenic traits with infinitesimal effects from standing variation:

“Despite the centrality of standing variation to the evolutionary synthesis and the widely recognized ubiquity of heritable variation for most traits in most populations, recent models of the genetics of adaptive evolution have tended to focus on new-mutation models, which treat evolution as a series of sequential selective sweeps dependent on the appearance of new beneficial mutations. Only in the past few years have phenotypic and molecular population genetic models begun to treat adaptation from standing variation seriously (Orr and Betancourt 2001; Innan and Kim 2004; Hermisson and Pennings 2005; Przeworski et al. 2005; Barrett and Schluter 2008; Chevin and Hospital 2008).”

To summarize, Svensson’s EQG-marginalization narrative turns history upside down in order to retcon contemporary thinking, i.e., he creates a false view of tradition in order to claim that new work with a mutationist flavor is traditional.

This anachronistic approach makes Svensson and Welch more effective in some ways than Futuyma, because they are really just focused on telling a good story, without being constrained by historical facts. But sometimes fan-service means sticking more closely to tradition. Even die-hard Synthesis fans are going to be complaining about Svensson’s fabrications, because they go beyond ret-conning into the realm of gas-lighting, undermining our shared understanding of the field, e.g., population geneticists (and most everyone else, too?) understand the “Fisherian view” of adaptation to be precisely the view that, according to Svensson, was marginalized in the Modern Synthesis. Clearly if anyone is going to take over the mantle from Futuyma and write the kind of fiction needed to keep the Synthesis brand fresh, the franchise needs a better crop of writers, or else needs to develop a fan-base that doesn’t care about consistency.

How to understand this phenomenon

Presumably if a grad student were to ask a genuine expert on gene duplication for the source of the sub-functionalization model, so as to study its assumptions and implications, they would be instructed to read the papers from 1999 or subsequent ones, and not Hughes (1994) or Ohno (1970), because the model is simply not present in these pre-1999 papers. Likewise, “Fisher’s geometric model” in Kimura’s sense is not in Fisher (1930). The theory of biases in the introduction process (or any model of this kind of effect) is absent from Kimura’s book and other works (e.g., from Dobzhansky, Haldane and Fisher) suggested as sources by Svensson and Berger (2019).

In this sense, back-projection is a mode of generating errors in the form of false attributions.

Why does this happen?

A contributing sociological factor is that, in academia, linking new ideas to prior literature, and especially to famous dead people, is a performative act that brings rewards to the speaker. Referencing older literature makes you look smart and well read, and also displays a respectful attitude toward tradition that is prized in some disciplines. And then those patterns of attribution get copied. When some authors started citing Haldane (1935) for male mutation bias, others simply copied this pattern (and resisting the pattern presumably would entail a social cost).

The extreme form of this performative act, a favorite gambit of theoreticians, is to dismiss new theoretical findings by saying “this is merely an implication of…” citing some ancient work. Indeed, many “we have long known” arguments defend the fullness and authority of tradition, in the face of some new discovery X, by saying “we have long known A and B”, where A and B can be construed to imply or allow X. Why don’t the critics undermine the novelty of X by saying “we have long known X”? Because they can’t. If X were truly old knowledge, the critics would just cite prior statements of X, following standard scientific practice. But when X is genuinely new, the defense of the status quo resorts to the implicit assumption that a result isn’t new and significant if someone could have reasoned it out from prior results, even if they did not actually do so. This implies the outrageously wrong notion that science is a perfect prediction machine, i.e., feed it A and B, and it auto-generates all the implications that will become important in the future. Clearly this is not how reality works (but see Platonic realism below).

Professional jealousy is a contributing factor when scientists offer their opinion on new findings, especially when those new findings are generating attention. I’m not going to dwell on this, but it’s obviously a real thing.

Likewise, politics come into play when pundits and opinion leaders are called on to comment on new work. In an ideal world, when a new result X appears, we would just call on the people genuinely interested in X, the ones best positioned to comment on it, and they would only accept the challenge if they had digested the new result X [5]. But if X is new, how do we know who is best qualified? If X crosses boundaries or raises new questions, how do we know who has thought deeply about it? Often reporters will rely on the same tired old commentators to explain Why Orthodoxy Is True. The ones who step willingly into this role are often the ones most deeply invested in maintaining the authority of the status quo, the brand-value of mainstream, acceptable views of evolution. Genuinely new findings undermine their brand. It’s a dangerous situation when so many evolutionists have publicly signaled a commitment to the belief that a 60-year-old conception of evolution is correct and sufficient, that this theory cannot be incorrect, only incomplete (Buss, 1987, p. 25), and when some even go so far as to insist that nothing fundamentally new remains to be discovered (Charlesworth, 1996). Given this commitment to tradition, how could they possibly respond to a genuinely new idea except by (1) rejecting it or (2) shifting the goal-posts to claim it for tradition? Either way, this attitude degrades scientific discourse.

A completely different way to think about back-projection and ret-conning — independent of motivations and power struggles — is that they reflect a mistaken conception of scientific theories. Philosophers and historians typically suppose that scientific theories are constructed by humans, in a specific historic context, out of things such as words, equations, and analogies. The theory does not exist until the point in time when it is constructed, or perhaps, the point when it appears in scientific discourse. Under this kind of view, the DDC subfunctionalization model did not exist until 1999, Kimura’s mutationist revision of Fisher’s argument did not exist until 1983, and the theory of biases in the introduction process did not exist until 2001.

However, scientists themselves often speak as if theories exist independently of humans, as universals, and are merely recognized or uncovered at various points in time, e.g., note Hahn’s use of “recognized” above. In philosophy, this is called “Platonic realism.” The theory is a real thing that exists, independent of time or place. It’s hard to resist this. I do it myself instinctively. When I look back at King (1971, 1972), it feels to me like he is trying (without complete success) to state the theory we stated in 2001.

This has an important implication for understanding how scientists construct historical narratives, and how they interpret the historical canon. In the Platonic view, there is a set of universal, time-invariant theories T1, T2, T3, etc., and anything written by anyone at any period in time can refer to these theories. In particular, anyone can see a theory like the DDC theory partly or incompletely, without clearly stating the theory or listing its implications. It’s like the parable of the blind men and the elephant, where each person senses and interprets a part of the thing, without construing it as an elephant.

By contrast, in the constructed view, if no one construes an elephant, describing the parts and how they fit together, there is no elephant, there is just a snake and a fan and a tree trunk and so on.

If we adopt the Platonic view, we will naturally tend to suppose that terms and ideas from the past may be mapped to each other and to the present, because they are all references to universal theories that have always existed. Clearly the Platonic view underlies the missing-pieces theory. Likewise, if one holds this view, one may imagine that Hughes or Ohno glimpsed the sub-functionalization theory without fully explaining the theory or its implications. They sensed a part of the elephant. The Platonic view is also at work in Orr’s framing, which suggests that Fisher entertained a mutationist conception of evolution as a 2-step origin-fixation process, as in Kimura’s theory, but perhaps saw it imperfectly, resulting in a mistake in his calculation. Svensson and Berger (2019) likewise suggest that Dobzhansky, Fisher and Haldane understood implications of a theory of biases in the introduction process (first published in 2001), even though those authors never explicitly state the theory or its implications.

By contrast, a historian or philosopher considering the concepts implied by a historical source does not insist on mapping them to the present on an assumption of Platonic realism or continuity with current thinking. In fact, just as the practitioners of any scholarly discipline make distinctions that are invisible to novices, part of the craft of history is to notice and articulate how extinct authors thought differently. For instance, a careful reader of Ohno (1970) will surely notice that his usage of the term “redundancy” often implies a unary concept rather than a relation. That is, Ohno often specifies that gene duplication creates a redundant copy, i.e., a copy with the property of being redundant, which makes it free to accumulate forbidden mutations, as if the original (“golden”) copy had been supplemented with a photocopy or facsimile that is a subtly different class of entity. By contrast, the logic of the DDC model is based on treating the two gene copies as equivalent. Today we think of redundancy as a multidimensional genomic property that is distributed quantifiably across genes.

This is how Platonic realism encourages and facilitates back-projection, especially when combined with confirmation bias and the kind of ancestor-worship common in evolutionary biology. If theories are universal and have always existed, then it must have been the case that any theory available today also was accessible to illustrious ancestors like Darwin and Fisher. They may have recognized or seen the theory in some way, perhaps only dimly or partly; their statements and their terminology can be mapped onto the theory. So, the reader who assumes Platonic realism and is motivated by the religion of ancestor-worship can explore the works of predecessors, quote-mining them for indications that they understood the Neutral Theory, mutationism, the DDC model, and so on.

Again, a distinctive feature of the Platonic view is that it provides a much broader justification for back-projection, because it allows for a theory to be sensed without fully grasping it or getting the implications right, like sensing only part of the elephant. So the test case distinguishing theories about theories is this: if a historic source has a collection of statements S that refers to parts of theory T without fully specifying the theory, and perhaps also features inconsistent language or statements that contradict implications of T, we would say under the constructed view that S lacks T, but under the Platonic view, we might conclude that S refers to T but does so in an incomplete or inconsistent way.
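The test case above can be caricatured in a few lines of code. This is a deliberately crude sketch, assuming (hypothetically) that a theory T and a source's statements S can each be reduced to a set of component labels; it ignores contradictions and the hard question of how statements map to components. The labels come from the elephant parable, not from any historical source.

```python
from typing import FrozenSet

# Hypothetical representation: a theory, or a source's statements, as a set of component labels.
Components = FrozenSet[str]


def constructed_view_has_theory(S: Components, T: Components) -> bool:
    """Constructed view (crudely): S contains T only if every component of T is stated in S."""
    return T <= S


def platonic_view_refers_to_theory(S: Components, T: Components) -> bool:
    """Platonic view (crudely): S can refer to T if it touches any part of T at all."""
    return bool(S & T)


# The elephant T, and one blind man's report S that senses only some of its parts.
T = frozenset({"trunk", "ear", "leg", "tail"})
S = frozenset({"trunk", "ear"})

print(constructed_view_has_theory(S, T))     # False: no one has construed the whole elephant
print(platonic_view_refers_to_theory(S, T))  # True: S "sensed part of the elephant"
```

Under this caricature, any source that touches even one component passes the Platonic test, which is one way to see why that view lowers the bar for finding precursors.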

A model for misattribution

When we are back-projecting contemporary ideas, drawing on Platonic realism while virtue-signaling our dedication to tradition and traditional authorities, what sources will we use? Of course, we will use the ones we have read, the ones on our bookshelf, the ones by famous authors, the ones that everyone else has on their bookshelves. We will simply draw on what is familiar and close at hand.

Thus, the practice of back-projection will make links from contemporary ideas to past ideas, and it will tend to make those links by something like what is called a “gravity model” in network modeling, where the activity or importance or capacity associated with a node is equated with mass and used to model links to other nodes. The force of back-projection from A to B, e.g., the chance that the neutral theory will be linked to Darwin, will depend on the importance of A and B, and how close they are in a space of ideas.

In more precise terms, the force of gravitational attraction between objects 1 and 2 is proportional to $m_1 m_2 / d^2$, i.e., the product of the masses divided by their distance squared. In a network model of epidemiology, we might treat persons and places of employment as the objects subject to attraction. Each person has a location and 1 unit of mass, and each workplace has a location and $n$ units of mass, where $n$ is the number of employees. For a given person $i$, we assign a workplace $j$ by sampling from available workplaces with a chance proportional to $m_j / d_{ij}^2$, the mass (number of employees) of the workplace divided by its squared distance from the person. Smaller workplaces tend to get only local workers, while larger ones draw from a larger area.
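The workplace-assignment rule can be sketched as follows. This is a minimal illustration, assuming points on a plane, Euclidean distance, and a made-up two-workplace layout; the names `gravity_weights` and `assign_workplace` are hypothetical. Because every person has 1 unit of mass, the person's mass drops out and only $m_j / d_{ij}^2$ matters.

```python
import math
import random
from typing import Dict, Tuple

Point = Tuple[float, float]
# Each workplace: (location, number of employees); the employee count plays the role of mass m_j.
Workplaces = Dict[str, Tuple[Point, int]]


def gravity_weights(person: Point, workplaces: Workplaces) -> Dict[str, float]:
    """Chance of assigning `person` to each workplace j, proportional to m_j / d_ij**2."""
    raw = {}
    for name, (location, employees) in workplaces.items():
        d = math.dist(person, location)
        if d == 0:
            raise ValueError(f"person sits exactly at {name}; m_j / d_ij**2 is undefined")
        raw[name] = employees / d ** 2
    total = sum(raw.values())
    return {name: w / total for name, w in raw.items()}


def assign_workplace(person: Point, workplaces: Workplaces, rng: random.Random) -> str:
    """Sample one workplace using the normalized gravity weights."""
    weights = gravity_weights(person, workplaces)
    names = list(weights)
    return rng.choices(names, weights=[weights[n] for n in names], k=1)[0]


# Hypothetical layout on a line: a small shop nearby and a large factory farther away.
workplaces = {"shop": ((1.0, 0.0), 5), "factory": ((10.0, 0.0), 500)}

print(gravity_weights((0.0, 0.0), workplaces))   # {'shop': 0.5, 'factory': 0.5}
print(gravity_weights((20.0, 0.0), workplaces))  # the factory dominates for a distant person
print(assign_workplace((0.0, 0.0), workplaces, random.Random(1)))
```

For a person at the origin, the factory is ten times farther away than the shop but has a hundred times the mass, so the two pulls exactly balance; a person farther out is drawn almost entirely to the large workplace.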

Imagine that concepts or theories or conjectures can be mapped to a conceptual hyperspace. This space could be defined in many ways, e.g., it could be the result of applying some machine-learning algorithm. We can take every idea from canonical sources, each $i_c$, and map it in this space, along with every new idea, each $i_n$. Any two ideas are some distance $d$ from each other. Any new idea has some set of neighboring ideas, including other new ideas and old ideas from canonical sources. Among the ideas from canonical sources, there is some set of nearest neighbors, and each one has some distance $d_{nc}$ from the target idea to be appropriated.
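Finding the nearest canonical neighbors of a new idea is a standard nearest-neighbor query. The sketch below assumes, purely for illustration, that each idea already has made-up coordinates in a 3-dimensional space and that distance is Euclidean; any real embedding, and the function name `nearest_canonical`, are hypothetical.

```python
import math
from typing import Dict, List, Sequence, Tuple


def nearest_canonical(
    target: Sequence[float], canon: Dict[str, Sequence[float]], k: int = 2
) -> List[Tuple[str, float]]:
    """Return the k canonical ideas closest to `target`, each paired with its distance d_nc."""
    distances = [(name, math.dist(target, vec)) for name, vec in canon.items()]
    distances.sort(key=lambda pair: pair[1])
    return distances[:k]


# Made-up coordinates; in practice they might come from some embedding of texts.
canon = {
    "canonical_idea_A": (0.0, 0.0, 1.0),
    "canonical_idea_B": (3.0, 4.0, 0.0),
    "canonical_idea_C": (0.5, 0.0, 0.0),
}
new_idea = (0.0, 0.0, 0.0)

print(nearest_canonical(new_idea, canon))
# [('canonical_idea_C', 0.5), ('canonical_idea_A', 1.0)]
```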

To complete the gravity model for appropriation, we need to assign different masses to the neighbors of a target idea, and perhaps also assign a mass to the target idea itself. Given the nature of appropriation, a suitable metric for the mass $m_c$ of an idea from the canon would be the reputation or popularity of its source, e.g., an idea from Darwin would have a greater mass than one from Ford, which in turn would have a greater mass than an idea from someone you have never heard of. If so, the force of back-projection linking a new idea to something from the canon would be proportional to $m_c / d_{nc}^2$, assuming that back-projection acts equally on all non-canonical ideas, i.e., they all have the same mass. If more important ideas stimulate a stronger force of back-projection (because traditionalists are more desperate to appropriate new ideas if they are important), then we could also assign an importance $m_n$ to the new idea, and the force of appropriation would be $m_n m_c / d_{nc}^2$.
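A minimal sketch of this force, with every name, mass and distance invented for illustration. It treats the force as an unnormalized weight and then normalizes the weights into chances that each canonical source receives the attribution; that normalization is one possible reading of the model, not something the argument requires.

```python
from typing import Dict, Tuple

# Hypothetical canonical neighbors of one new idea: name -> (mass m_c, distance d_nc).
# Both numbers are invented; m_c stands in for the reputation or popularity of the source.
neighbors: Dict[str, Tuple[float, float]] = {
    "famous_source": (100.0, 2.0),
    "well_known_source": (10.0, 1.0),
    "obscure_source": (1.0, 0.5),
}


def appropriation_force(m_c: float, d_nc: float, m_n: float = 1.0) -> float:
    """Force of back-projection m_n * m_c / d_nc**2 (m_n = 1 when all new ideas weigh the same)."""
    return m_n * m_c / d_nc ** 2


def attribution_chances(
    neighbors: Dict[str, Tuple[float, float]], m_n: float = 1.0
) -> Dict[str, float]:
    """Normalize the forces into chances that each canonical source gets the credit."""
    forces = {name: appropriation_force(m, d, m_n) for name, (m, d) in neighbors.items()}
    total = sum(forces.values())
    return {name: f / total for name, f in forces.items()}


for name, (m, d) in neighbors.items():
    print(name, appropriation_force(m, d))
# famous_source 25.0
# well_known_source 10.0
# obscure_source 4.0
print(attribution_chances(neighbors))
```

In this toy setup the idea closest to the new one comes from the obscure source, yet the famous source, four times farther away, still receives most of the weight.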

Thus, the more important a new theory, the greater the pressure to back-project it to traditional sources. The more popular a historic source, the more likely scientists will attribute a new theory to it. If two different historic sources suggest ideas that are equally close to a new theory (i.e., same $d_{nc}$), the one with the higher mass $m_c$ (e.g., popularity) is more likely to be chosen as the target of back-projection.
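Both claims follow directly from the proportionality $F \propto m_n m_c / d_{nc}^2$. In the short worked comparison below, $F_1$ and $F_2$ (symbols introduced only for this derivation) are the forces pulling one new idea toward two canonical sources:

$$
\frac{F_1}{F_2}
= \frac{m_n\, m_{c,1} / d_{nc,1}^2}{m_n\, m_{c,2} / d_{nc,2}^2}
= \frac{m_{c,1}}{m_{c,2}} \left( \frac{d_{nc,2}}{d_{nc,1}} \right)^{2}
$$

When $d_{nc,1} = d_{nc,2}$, the ratio reduces to $m_{c,1} / m_{c,2}$, so the more popular source wins. More generally, the two pulls balance when

$$
F_1 = F_2 \iff d_{nc,2} = d_{nc,1} \sqrt{\frac{m_{c,2}}{m_{c,1}}}
$$

so a source with four times the mass can sit twice as far from the new idea and still exert the same pull. Because $m_n$ cancels in the ratio, in this model the importance of the new idea raises the overall pressure to appropriate but does not change which canonical source is favored.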

If the chances of back-projection follow this kind of gravity model, then clearly back-projection is an effective system for diverting credit from contemporary scientists and lesser-known scientists of the past, to precisely those dead authorities who already receive undue attention. Under a gravity model for misattribution, Darwin is going to get credited with all sorts of ideas, because he is already famous and he wrote several sprawling books that are available on bookshelves of scientists, even the ones who have no other books from 19th-century authors who might have said it better than Darwin. If one has very low standards for what constitutes an earlier expression of a theory, then it is easy to find precursors.

Resisting back-projection

The two most obvious negative consequences of back-projection and ret-conning are that they (1) encourage inaccurate views of history and (2) promote unfair attribution of credit.

However, those are just the obvious and immediate consequences.

Back-projection and anachronism have contributed to a rather massive misapprehension of the position on population genetics underlying the Modern Synthesis, directly related to the “Fisher’s geometric model” story above. Beatty (2022) has written about this recently. I’ve been writing about it for years. Today, many of us think of evolution as a Markov chain of mutation-fixation events, a 2-step process in which “mutation proposes, selection disposes” (decides). If you asked us what is the unit step in evolution, we would say it is the fixation of a mutation, or at least, that fixations are the countable end-points. The latter perspective is sometimes arguably evident in classic work, e.g., the gist of Haldane’s approach to the substitution load is to count how many allele replacements a population can bear. But more typically, this kind of thinking is not classical, but reflects the molecular view that began to emerge among biochemists in the 1960s.
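A minimal sketch of the origin-fixation view as a Markov chain of mutation-fixation events. The assumptions are mine, not the text's: a diploid population of size N with effective size equal to N, new mutations with semidominant selection coefficient s (fitnesses 1, 1+s, 1+2s), and Kimura's diffusion approximation for the fixation probability of a single new copy. Under these assumptions, substitutions arrive at rate $K = 2N\mu u$, with exponential waiting times between them. All function names are hypothetical.

```python
import math
import random
from typing import List


def fixation_probability(N: int, s: float) -> float:
    """Kimura's approximation u = (1 - e^{-2s}) / (1 - e^{-4Ns}) for one new mutant copy.

    Assumes a diploid population with effective size N and semidominant fitness effects.
    expm1 keeps precision when s is tiny; very large |N*s| may overflow.
    """
    if s == 0:
        return 1.0 / (2 * N)  # neutral limit
    return math.expm1(-2 * s) / math.expm1(-4 * N * s)


def origin_fixation_rate(N: int, mu: float, s: float) -> float:
    """Step 1 (origin): 2*N*mu new mutations per generation. Step 2 (fixation): each fixes with probability u."""
    return 2 * N * mu * fixation_probability(N, s)


def substitution_times(N: int, mu: float, s: float, n_events: int, rng: random.Random) -> List[float]:
    """Simulate the chain: each substitution is a separate event after an exponential waiting time."""
    rate = origin_fixation_rate(N, mu, s)
    t, times = 0.0, []
    for _ in range(n_events):
        t += rng.expovariate(rate)
        times.append(t)
    return times


print(origin_fixation_rate(N=1000, mu=1e-8, s=0.0))   # neutral limit: approximately mu
print(origin_fixation_rate(N=1000, mu=1e-8, s=0.01))  # beneficial: well above mu
print(substitution_times(1000, 1e-8, 0.01, 3, random.Random(0)))
```

In this sketch each fixation is a separate, countable event whose timing depends on when a mutation happens to arise, which is exactly the feature that distinguishes it from the multi-threaded picture of the Modern Synthesis.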

The origin-fixation view of evolution from new mutations, where each atomic change is causally distinct, is certainly not what the architects of the Modern Synthesis had in mind. The MS was very much a reaction against the non-Darwinian “lucky mutant” view, in which the timing and character of episodes of evolutionary change depend on the timing and character of events of mutation. Instead, in the MS view, change happens when selection brings together masses of infinitesimal effects from many loci simultaneously. In both Darwin’s theory and the Modern Synthesis, adaptation is a multi-threaded tapestry: it is woven by selection bringing together many small fibers simultaneously [4]. This is essential to the creativity claim of neo-Darwinism. If we take it away, then selection is just a filter acting on each thread separately, a theory that Darwin and the architects of the Modern Synthesis disavowed. Again, historical neo-Darwinism, in its dialectical encounter with the mutationist view, insists that adaptation is multi-threaded.

As you might guess at this point, I would argue that back-projection does not merely distort history and divert credit in an unfair way, it is part of a pernicious system of status quo propaganda that perpetually shifts the goal-posts on tradition in a way that undermines the value of truth itself, and suppresses healthy processes in which new findings are recognized and considered for their implications.

A scientific discipline is in a pathological state if a large fraction of its leaders are unable or unwilling to recognize new findings or award credit to the scientists who discover them, and instead confuse the issues and redirect credit and attention to the status quo and to some extremely dead white men like Darwin and Fisher. The up-and-coming scientists in such a discipline will learn that making claims of novelty is either bad science or bad practice, and they will respond by limiting themselves to incremental science, or, if they actually have a new theory or a new finding, they will seek to package it as an old idea from some dead authority. A system that ties success to misrepresenting what a person cares about most is a corrosive system.

So, we have good reasons to resist back-projection.

But how does one do it in practice? I’m not sure, but I’m going to offer some suggestions based on my own experience with a lifetime of trying not to be full of shit. I think this is mainly a matter of being aware of our own bullshitting and demanding greater rigor from ourselves and others, and this benefits from understanding how bullshit works (e.g., the we-have-long-known fallacy, back-projection, etc.) and what it costs the health and integrity of the scientific process.

Everyone bullshits, in the weak sense of filling in gaps in a story we are telling so that the story works out right, even if we aren’t really sure that we are filling the gaps in an accurate or grounded way. When I described the Sally-Ann test, part was factual (the test, the age-dependent result), but the part about “the mental skill of modeling the perspective of another person” is just an improvised explanation, i.e., I invented it, guided partly by my vague recollection of how the test is interpreted by the experts, but also guided by my intuition and my desire to weave this into a story about perspective and sophistication that works for my narrative purposes, sending a not-so-subtle message to the reader that scientific anachronisms are childish and naïve. Succeeding at this kind of improvisation means telling a good story that covers the facts without introducing misrepresentations that are significantly misleading in context.

When I wrote (above) about the self-hypnosis of Darwin’s followers, that was also an invention, a fiction, but of a different kind, because it is obviously fictional to a sophisticated reader. This is important. A mature reader encountering that passage will understand immediately that I am poking fun at Darwin’s followers while at the same time suggesting something reasonable and testable: scientists who align culturally with Darwin tend to be blind to the flaws in Darwin’s position. Again, any reasonably sophisticated reader will understand that. However, it would take a high level of sophistication for a reader to surmise that I was improvising in regard to “the mental skill of modeling the perspective of another person.” Part of being a sophisticated and sympathetic reader is knowing how to divide up what the author is saying into the parts that the author wants us to take seriously, and the parts that are just there to make the story flow. Part of being a sophisticated writer is knowing how to write good stories without letting the narrative distort the topic.

First, always remember the difference between facts and stories, and use your reason to figure out which is which. The story of Fisher making a mistake is a constructed story, not a fact. The authors of this story are not citing a journal entry from Fisher’s diaries where he writes “I made a mistake.” Someone looked at the facts and then added the notion of “mistake” to make retrospective sense of those facts. In the sub-functionalization story, the link to Ohno and Hughes is speculative. If ideas had DNA in them, then maybe we could extract the DNA from ideas and trace their ancestry, though I doubt it would be that simple. In any case, there is no DNA trace showing that the DDC model is somehow derived from the published works of Hughes or Ohno. It’s a fact that Ohno talked about redundancy and dosage compensation and so on, and it’s a fact that Hughes proposed his gene-sharing model, but it is not a fact that these earlier ideas led to the DDC model. Someone constructed that story.

By the way, it bears emphasis that the inability to trace ideas definitively is pretty much universal even if one source cites another. How many times have you had an idea and then found a prior source for it? You thought of it, but you are going to cite the prior source. So when one paper cites another for an idea, that doesn’t mean the idea literally came from the previous paper in a causal sense. We often use this “comes from” or “derives from” or “source of” language, but it is an inference. We only know that the prior paper is a source for the idea, not the source of the idea.

Second, gauging the novelty of an idea can be genuinely hard, especially in a field like evolution that is full of half-baked ideas. If you find that people are normalizing a new result by making statements that were never made before (like the “for a century” claim above), that is a sign that the result is really new. Likewise, if people are reacting to a new result with the same old arguments, but the old arguments don’t actually fit the new result, that indicates that new arguments are needed. In general, the strongest sign of novelty (in my opinion) is misapprehension. When a result or idea is genuinely new, you don’t have a pre-existing mental slot for it. It’s like a book in a completely new genre, so you don’t know where it goes on your bookshelf. The people who are most confident about their mastery are the most likely to shove that unprecedented book into the wrong place and confidently explain why it belongs there using weak arguments. So, when you find that experts are reacting to a new finding by saying really dubious things, this is a strong indicator of novelty.

Third, I recommend developing a more nuanced or graduated sense of novelty by distinguishing different types of achievement. Scientific papers are full of ideas, but precisely stated models with verifiable behavior are much rarer. Certain key types of innovation are both superior and difficult, and they stand above more mundane scientific accomplishments. One of them is proposing a new method or theory and showing that it works using a model system. Another is establishing a proposition by a combination of facts and logic. Another is synthesizing the information on a topic for the first time, i.e., defining a topic.

It should be obvious that stating a possibility X is a different thing from asserting that X is true, which is different again from demonstrating that X is true. Many authors have suggested that internal factors might shape evolution by influencing tendencies of variation, and many have insisted that this is true, but very few if any have been able to provide convincing evidence (for a skeptical view, see Houle, et al. 2017). Obviously, we may feel some obligation to cite prior sources that merely suggest X as a possibility, but if a contemporary source demonstrates X conclusively, this is something to be applauded, not treated as a derivative result. If demonstrating the truth of ideas that already exist is treated as mundane and derivative, our field will become even more littered with half-baked ideas.

If ideas were everything, we would not need scientific methods to turn ideas into theories, develop models, derive implications, evaluate predictions and so on, we would just need people spouting ideas. In particular, authors who propose a theory and make a model [2] that illustrates the theory have done important scientific work and deserve credit for that. If an author presents us with a theory-like idea but there is no model, we often don’t know what to make of the idea.

It seems to me that, in evolutionary biology, we have a surfeit of poorly specified theories. That is, the ostensible theory, the theory-like thing, consists of some set of statements S. We may be able to read all the statements in S but still not understand what it means (e.g., Interaction-Based Evolution), and even if we have a clear sense of what it might mean, we may not be certain that this meaning actually follows from the stated theory. An example of the latter case would be directed mutation. Cairns, et al. proposed some ways that this could happen, e.g., via reverse transcription of a beneficial transcription error that helps a starving cell to survive. If this idea is viable in principle, we should be able to engineer a biological system that does it, or construct a computer model with this behavior. But no one has ever done that, to my knowledge.

This is a huge problem in evolutionary biology. Much of the ancient literature on evolution lacks models, and because of this, lacks the kind of theory that we can really sink our teeth into. Part of this is deliberate, in the sense that thinkers like Darwin avoided the kind of speculative formal theorizing that, today, we consider essential to science. The literature is chock full of unfinished ideas about a broad array of topics, and every time one of those topics comes up, we have to go over all the unfinished ideas again. Poring over that literature is historically interesting, but scientifically it is IMHO a huge waste of time because, again, ideas are a dime a dozen. If we just threw all those old books into the sea, we would lose some facts and some great hand-drawn figures, but the ideas would all be replenished within a few months because there is a more diverse and far larger group of people at work in science today and they are better trained.

Finally, think of appropriation as a sociopolitical act, as an exercise of power. As explained, even when an idea “comes from” some source, it often doesn’t come from that source in a causal sense. That’s a story we construct. Each time normalization-appropriation stories are constructed to put the focus on tradition and illustrious ancestors, they redirect the credit for scientific discoveries to a lineage grounded in tradition that gravitates toward the most important authorities. Telling this kind of story is a performative act and a sociopolitical act, and it is inherently patristic: it is about legitimizing or normalizing ideas by linking them to ancestors with identifiable reputations, as if we have to establish the pedigree of an idea, and a good pedigree at that, one that traces back to the right people. Think about that. Think about all those fairy-tales from the classics to Disney to Star Wars in which the worthy young hero or heroine is, secretly, the offspring of royalty, as if an ordinary person could not be worthy, as if young Alan Force and his colleagues could not have been the intellectual sources of a bold new model of duplicate gene retention, but had to inherit it from Ohno.

Part of our job as scientists operating in a community of practice is to recognize new discoveries, articulate their novelty, and defend them against the damaging effects of minimization and appropriation. The scientists who are marginalized for political or cultural reasons are the least likely to be given credit, and the same scientists may hesitate to promote the novelty of their own work due to the fear of being accused of self-promotion. In this context, it’s up to the rest of us to push back against misappropriation and back-projection and make sure that novelty is recognized appropriately, and that credit is assigned appropriately, bearing in mind that these outcomes make science healthier.

References

- Blanquart F, Achaz G, Bataillon T, Tenaillon O. 2014. Properties of selected mutations and genotypic landscapes under Fisher’s geometric model. Evolution 68:3537-3554. doi:10.1111/evo.12545

- Buss LW. 1987. The Evolution of Individuality. Princeton: Princeton Univ. Press.

- Charlesworth B. 1996. The good fairy godmother of evolutionary genetics. Curr Biol 6:220.

- Force A, Lynch M, Pickett FB, Amores A, Yan YL, Postlethwait J. 1999. Preservation of duplicate genes by complementary, degenerative mutations. Genetics 151:1531-1545.

- Gayon J. 1998. Darwinism’s Struggle for Survival: Heredity and the Hypothesis of Natural Selection. Cambridge, UK: Cambridge University Press.

- Haldane JBS. 1935. The rate of spontaneous mutation of a human gene. J Genet 31:317-326.

- Haldane JBS. 1947. The mutation rate of the gene for haemophilia, and its segregation ratios in males and females. Ann Eugen 13:262-271.

- Haldane JBS. 1948. The formal genetics of man. Proc R Soc Lond B 135:147-170.

- Hughes AL. 1994. The evolution of functionally novel proteins after gene duplication. Proc R Soc Lond B 256:119-124.

- Kimura M. 1983. The Neutral Theory of Molecular Evolution. Cambridge: Cambridge University Press.

- Li WH, Yi S, Makova K. 2002. Male-driven evolution. Curr Opin Genet Dev 12:650-656.

- Matuszewski S, Hermisson J, Kopp M. 2014. Fisher’s geometric model with a moving optimum. Evolution 68:2571-2588. doi:10.1111/evo.12465

- Ohno S. 1970. Evolution by Gene Duplication. New York: Springer-Verlag.

- Orr HA. 2005a. The genetic theory of adaptation: a brief history. Nat Rev Genet 6:119-127.

- Orr HA. 2005b. Theories of adaptation: what they do and don’t say. Genetica 123:3-13.

- Rockman MV. 2012. The QTN program and the alleles that matter for evolution: all that’s gold does not glitter. Evolution 66:1-17.

Notes

- Actually, in the evolutionary literature, traditionalists do not assume that all past thinkers saw the same theories we use today, but only the past thinkers considered to be righteous by Synthesis standards, i.e., the ones from the neo-Darwinian tradition. Scientists from outside the tradition are understood to have been bonkers.

- In the philosophy of science, a model is a thing that makes all of the statements in a theory true. So, to borrow an example from Elisabeth Lloyd, suppose a theory says that A is touching B, and B is touching C, but A and C are not touching. We could make a model of this using 3 balls labeled A, B and C. Or we could point to 3 adjacent books on a shelf, label them A, B and C, and call that a model of the theory.

- I’m going to write something separate about Monroe, et al. (2022) after about 6 months have passed.

- In Darwin’s original theory, the fibers in that tapestry blend together, acting like fluids. The resulting tapestry cannot be resolved into threads anymore, because they lose their individuality under blending. A trait cannot be dissected in terms of particulate contributions, but may be explained only by a mass flow guided by the hand of selection. This is why Darwin says that, on his theory, natura non facit saltum must be strictly true.

- In some instances of reactions to Monroe, et al., 2022, this actually worked well, in the sense that people like Jianzhi Zhang and Laurence Hurst— qualified experts who were sympathetic, genuinely interested, and critical— were called on to comment.

- Google Ngram data show an acceleration of references to “Mendel” after 1900; there are earlier references, but upon examination (based on looking at about 10 of them), these are references to lawsuits and other mundane circumstances involving persons with a given name or surname of Mendel. Scholars of de Vries such as Stamhuis believe that de Vries understood Mendelian ratios well before 1900, and before he discovered Mendel’s paper.

- Note that one often sees a complementary fallacy in which reform of evolutionary theory is demanded on the basis of the discovery and scientific recognition of some phenomenon X, usually from genetics or molecular biology. That is, the reformist fallacy is “X is a non-traditional finding in genetics therefore we have to modify evolutionary theories,” whereas the traditionalist fallacy is “we have long known about X therefore we do not have to change any evolutionary theories.” Both versions of the argument are made in regard to epigenetics. Shapiro’s (2011) entire book rests on the reformist fallacy where X = HGT, transposition, etc., and Dean’s (2012) critical review of Shapiro (2011) relies on the traditionalist fallacy of asserting that if “Darwinians” study X, it automagically becomes part of a “Darwinian” theory: “Horizontal gene transfer, symbiotic genome fusions, massive genome restructuring…, and dramatic phenotypic changes based on only a few amino acid replacements are just some of the supposedly non-Darwinian phenomena routinely studied by Darwinists”. What Dean is describing here are saltations, which are not compatible with a gradualist theory, i.e., a theory that takes natura non facit saltum as a doctrine. Whatever part of evolution is based on these saltations, that is the part that requires some evolutionary theory for where jumps come from, i.e., what is the character and frequency of variational jumps, and how are they incorporated in evolution.

- I later discovered sources for the Haldane 1935 fallacy. As noted, Haldane (1935) merely designates abstract variables mu and nu for the female and male mutation rates, without claiming that there is any difference. By contrast, Haldane (1947) clearly uses available data to argue that the male mutation rate is higher, and he offers some possible biological reasons:

If the difference between the sexes is due to mutation rather than crossing over, many explanations could be suggested. The primordial oocytes are mostly if not all formed at birth, whereas spermatogonia go on dividing throughout the sexual life of a male. So if mutation is due to faulty copying of genes at a nuclear division, we might expect it to be commoner in males than females. Again the chromosomes in human oocytes appear to pass most of their time in the pachytene stage. If this is relatively invulnerable to radiation and other influences, the difference is explicable. On either of these hypotheses we should expect higher mutability in the male to be a general property of human and perhaps other vertebrate genes. It is difficult to see how this could be proved or disproved for many years to come.

As early as 2000, authors began to cite the 1935 paper together with the 1947 and 1948 papers as the source of male mutation bias (e.g., Huttley, et al. 2000). The first paper I have seen that clearly makes a mistaken attribution is Li, et al. (2002):

Almost 70 years ago, Haldane [1] proposed that the male mutation rate in humans is much higher than the female mutation rate because the male germline goes through many more rounds of cell divisions (DNA replications) per generation than does the female germline. Under this hypothesis, mutations arise mainly in males, so that evolution is 'male-driven' [2].

The first citation is to Haldane (1935), and the second is to Miyata, et al. (1987). Note that Li, et al. not only erroneously attribute male mutation bias to Haldane (1935); they also seem to equate it with the evolutionary hypothesis of male-driven evolution from Miyata, et al.